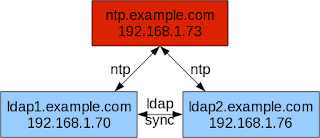

There are several ways to replicate your LDAP servers; I'll show you how to set up N-Way Multi-Master replication. Begin with your name resolution. Add all hosts (the NTP server and the two LDAP servers) to /etc/hosts:

# vi /etc/hosts

...

192.168.1.73 ntp.example.com ntp

192.168.1.70 ldap1.example.com ldap1

192.168.1.76 ldap2.example.com ldap2

...

Check that your /etc/nsswitch.conf lists files as a source for host lookups:

# vi /etc/nsswitch.conf

...

hosts: files dns

...
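The two checks above can also be scripted. This is only a minimal sketch: the function names `hosts_file_has` and `nsswitch_uses_files` are made up for this example, and it assumes an awk that supports `-v` and `sub()` (on Solaris that would be nawk or the XPG4 awk).

```shell
# Succeeds if every given name appears as a host name or alias in the hosts file.
# Usage: hosts_file_has FILE NAME...
hosts_file_has() {
    file=$1
    shift
    for name in "$@"; do
        awk -v n="$name" '
            { sub(/#.*/, "") }    # ignore comments
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$file" || { echo "missing in $file: $name" >&2; return 1; }
    done
}

# Succeeds if the "hosts:" line of the nsswitch file lists "files".
# Usage: nsswitch_uses_files [FILE]
nsswitch_uses_files() {
    awk '
        { sub(/#.*/, "") }
        $1 == "hosts:" { for (i = 2; i <= NF; i++) if ($i == "files") ok = 1 }
        END { exit !ok }
    ' "${1:-/etc/nsswitch.conf}"
}
```

On each LDAP server you could then run `hosts_file_has /etc/hosts ntp.example.com ldap1.example.com ldap2.example.com && nsswitch_uses_files`. Where it is available, `getent hosts ldap2.example.com` shows what the resolver actually returns.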

If your DNS goes down, the LDAP servers can still find each other through /etc/hosts, which can become important. Next, make slapd ready for replication. I assume that you already have two LDAP servers up and running. My current slapd.conf looks like this on both servers:

# cat /etc/openldap/slapd.conf

# SCHEMES

include /etc/openldap/schema/core.schema

include /etc/openldap/schema/cosine.schema

include /etc/openldap/schema/inetorgperson.schema

include /etc/openldap/schema/nis.schema

# ACL

include /etc/openldap/acl.conf

# GENERIC

pidfile /var/run/slapd/slapd.pid

argsfile /var/run/slapd/slapd.args

suffix "dc=example,dc=com"

rootdn "cn=ldapadmin,dc=example,dc=com"

rootpw {SSHA}ycE5W7XnPe7xShTNOVbVywxutH4Dgdiy

password-hash {MD5}

loglevel 256

sizelimit unlimited

allow bind_v2 bind_anon_dn

# DATABASE

database bdb

directory /var/lib/ldap/example.com

# BIND INDEX

index objectClass eq

index uid eq

index cn eq

index memberUid eq

index uidNumber eq

It includes a couple of schemas, a file for the ACLs, the database definition, etc. Next, add the replication configuration to slapd.conf on both servers:

# vi /etc/openldap/slapd.conf

...

# REPLICATION

ServerID 1 "ldap://ldap1.example.com"

ServerID 2 "ldap://ldap2.example.com"

moduleload syncprov

overlay syncprov

syncprov-checkpoint 10 1

syncprov-sessionlog 100

syncrepl rid=1

  provider="ldap://192.168.1.70"

  type=refreshAndPersist

  schemachecking=on

  retry="5 10 30 +"

  searchbase="dc=example,dc=com"

  bindmethod=simple

  binddn="cn=ldapadmin,dc=example,dc=com"

  credentials="secret_clear_text_password"

syncrepl rid=2

  provider="ldap://192.168.1.76"

  type=refreshAndPersist

  schemachecking=on

  retry="5 10 30 +"

  searchbase="dc=example,dc=com"

  bindmethod=simple

  binddn="cn=ldapadmin,dc=example,dc=com"

  credentials="secret_clear_text_password"

MirrorMode on

...
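Before restarting anything it can help to let slapd parse the edited file. A small sketch using slaptest, which ships with OpenLDAP; the wrapper name `check_slapd_conf` is made up here, and the default path is simply the one used in this post.

```shell
# Dry-run syntax check of a slapd.conf; returns slaptest's exit status.
# -f names the config file, -u only parses the configuration and does not
# fail when the database files cannot be opened.
# Usage: check_slapd_conf [FILE]
check_slapd_conf() {
    conf=${1:-/etc/openldap/slapd.conf}
    slaptest -u -f "$conf"
}
```

Run `check_slapd_conf` on both servers after editing. A non-zero exit status means slapd would most likely refuse to start with that file.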

It lists both servers by FQDN as ServerID, defines one syncrepl block per provider (with search base and bind credentials), loads the syncprov module, etc. Now make sure the DIT on ldap2 is empty and that slapd on ldap2 is not running. Then restart slapd on ldap1:

# ssh root@ldap2

...

# kill -15 `cat /var/run/slapd/slapd.pid`

# pgrep -fl slapd

# ssh root@ldap1

...

# kill -15 `cat /var/run/slapd/slapd.pid`

# pgrep -fl slapd

# /usr/libexec/slapd -h ldap://192.168.1.70:389
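slapd writes its log through syslog, by default with facility local4, so the syslog daemon needs a rule that sends local4 somewhere, typically a line such as `local4.* /var/log/ldap.log`. The following sketch only checks whether such a rule exists. The function name `local4_rules` and the default path /etc/syslog.conf are assumptions; an rsyslog system would use /etc/rsyslog.conf instead.

```shell
# Prints the uncommented rules of a syslog configuration that mention
# facility local4. Returns non-zero if there is none.
# Usage: local4_rules [FILE]
local4_rules() {
    grep '^[^#]*local4\.' "${1:-/etc/syslog.conf}"
}
```

If `local4_rules` prints nothing, add a rule for local4 and reload the syslog daemon before starting slapd.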

If you have configured slapd for logging then you should see something similar to this on ldap1:

# tail -f /var/log/ldap.log

...

Nov 27 15:51:40 ldap1 slapd[12403]: @(#) $OpenLDAP: slapd 2.4.23 (Nov 8 2011 21:16:17) $ ^Iroot@ldap1:/tmp/openldap-2.4.23/servers/slapd

Nov 27 15:51:41 ldap1 slapd[12404]: bdb_monitor_db_open: monitoring disabled; configure monitor database to enable

Nov 27 15:51:41 ldap1 slapd[12404]: slapd starting

Nov 27 15:51:41 ldap1 slapd[12404]: slap_client_connect: URI=ldap://192.168.1.76 DN="cn=ldapadmin,dc=example,dc=com" ldap_sasl_bind_s failed (-1)

Nov 27 15:51:41 ldap1 slapd[12404]: do_syncrepl: rid=002 rc -1 retrying (9 retries left)

Nov 27 15:51:41 ldap1 slapd[12404]: conn=1000 fd=12 ACCEPT from IP=192.168.1.70:41548 (IP=192.168.1.70:389)

Nov 27 15:51:41 ldap1 slapd[12404]: conn=1000 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" method=128

Nov 27 15:51:41 ldap1 slapd[12404]: conn=1000 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" mech=SIMPLE ssf=0

Nov 27 15:51:41 ldap1 slapd[12404]: conn=1000 op=0 RESULT tag=97 err=0 text=

Nov 27 15:51:41 ldap1 slapd[12404]: conn=1000 op=1 SRCH base="dc=example,dc=com" scope=2 deref=0 filter="(objectClass=*)"

Nov 27 15:51:41 ldap1 slapd[12404]: conn=1000 op=1 SRCH attr=* +

Nov 27 15:51:46 ldap1 slapd[12404]: slap_client_connect: URI=ldap://192.168.1.76 DN="cn=ldapadmin,dc=example,dc=com" ldap_sasl_bind_s failed (-1)

Nov 27 15:51:46 ldap1 slapd[12404]: do_syncrepl: rid=002 rc -1 retrying (8 retries left)

You can see in the last two lines that slapd tries to reach ldap2 (192.168.1.76) for replication and keeps retrying because ldap2 is not running yet. Now start slapd on ldap2:

# ssh root@ldap2

...

# /usr/libexec/slapd -h ldap://192.168.1.76:389
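With both daemons started, a quick way to see whether each listener accepts connections is an anonymous bind with ldapwhoami from the OpenLDAP client tools. This is a sketch only: the helper name `ldap_status` is made up, and it assumes anonymous binds are allowed on your servers.

```shell
# Prints "<uri> up" or "<uri> down" for each LDAP URI, based on whether an
# anonymous simple bind (-x) against that URI succeeds.
# Usage: ldap_status URI...
ldap_status() {
    for uri in "$@"; do
        if ldapwhoami -x -H "$uri" >/dev/null 2>&1; then
            echo "$uri up"
        else
            echo "$uri down"
        fi
    done
}
```

For the two servers in this post that would be `ldap_status ldap://192.168.1.70 ldap://192.168.1.76`.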

Now check the logfile on ldap1:

# ssh root@ldap1

...

# tail -f /var/log/ldap.log

...

Nov 27 15:55:42 ldap1 slapd[12404]: conn=1001 fd=14 ACCEPT from IP=192.168.1.76:34032 (IP=192.168.1.70:389)

Nov 27 15:55:42 ldap1 slapd[12404]: conn=1001 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" method=128

Nov 27 15:55:42 ldap1 slapd[12404]: conn=1001 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" mech=SIMPLE ssf=0

Nov 27 15:55:42 ldap1 slapd[12404]: conn=1001 op=0 RESULT tag=97 err=0 text=

Nov 27 15:55:42 ldap1 slapd[12404]: conn=1001 op=1 SRCH base="dc=example,dc=com" scope=2 deref=0 filter="(objectClass=*)"

Nov 27 15:55:42 ldap1 slapd[12404]: conn=1001 op=1 SRCH attr=* +

And on ldap2:

# ssh root@ldap2

...

# tail -f /var/log/ldap.log

...

Nov 27 15:55:42 ldap2 slapd[16336]: [ID 702911 local4.debug] @(#) $OpenLDAP: slapd 2.4.26 (Nov 20 2011 10:58:39) $

Nov 27 15:55:42 ldap2 root@ldap2:/usr/share/src/openldap-2.4.26/servers/slapd

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 468869 local4.debug] bdb_monitor_db_open: monitoring disabled; configure monitor database to enable

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 100111 local4.debug] slapd starting

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 848112 local4.debug] conn=1000 fd=13 ACCEPT from IP=192.168.1.76:34033 (IP=192.168.1.76:389)

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 215403 local4.debug] conn=1000 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" method=128

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 600343 local4.debug] conn=1000 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" mech=SIMPLE ssf=0

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 588225 local4.debug] conn=1000 op=0 RESULT tag=97 err=0 text=

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 469902 local4.debug] conn=1000 op=1 SRCH base="dc=example,dc=com" scope=2 deref=0 filter="(objectClass=*)"

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 744844 local4.debug] conn=1000 op=1 SRCH attr=* +

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 405749 local4.debug] findbase failed! 32

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 832699 local4.debug] conn=1000 op=1 SEARCH RESULT tag=101 err=32 nentries=0 text=

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 449217 local4.debug] do_syncrep2: rid=002 LDAP_RES_SEARCH_RESULT (32) No such object

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 539778 local4.debug] do_syncrep2: rid=002 (32) No such object

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 218904 local4.debug] conn=1000 op=2 UNBIND

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 952275 local4.debug] conn=1000 fd=13 closed

Nov 27 15:55:42 ldap2 slapd[16337]: [ID 445809 local4.debug] do_syncrepl: rid=002 rc -2 retrying (9 retries left)

Nov 27 15:55:47 ldap2 slapd[16337]: [ID 848112 local4.debug] conn=1001 fd=18 ACCEPT from IP=192.168.1.76:34034 (IP=192.168.1.76:389)

Nov 27 15:55:47 ldap2 slapd[16337]: [ID 215403 local4.debug] conn=1001 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" method=128

Nov 27 15:55:47 ldap2 slapd[16337]: [ID 600343 local4.debug] conn=1001 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" mech=SIMPLE ssf=0

Nov 27 15:55:47 ldap2 slapd[16337]: [ID 588225 local4.debug] conn=1001 op=0 RESULT tag=97 err=0 text=

Nov 27 15:55:47 ldap2 slapd[16337]: [ID 469902 local4.debug] conn=1001 op=1 SRCH base="dc=example,dc=com" scope=2 deref=0 filter="(objectClass=*)"

Nov 27 15:55:47 ldap2 slapd[16337]: [ID 744844 local4.debug] conn=1001 op=1 SRCH attr=* +

Nov 27 15:56:01 ldap2 slapd[16337]: [ID 848112 local4.debug] conn=1002 fd=19 ACCEPT from IP=192.168.1.70:39106 (IP=192.168.1.76:389)

Nov 27 15:56:01 ldap2 slapd[16337]: [ID 215403 local4.debug] conn=1002 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" method=128

Nov 27 15:56:01 ldap2 slapd[16337]: [ID 600343 local4.debug] conn=1002 op=0 BIND dn="cn=ldapadmin,dc=example,dc=com" mech=SIMPLE ssf=0

Nov 27 15:56:01 ldap2 slapd[16337]: [ID 588225 local4.debug] conn=1002 op=0 RESULT tag=97 err=0 text=

Nov 27 15:56:01 ldap2 slapd[16337]: [ID 469902 local4.debug] conn=1002 op=1 SRCH base="dc=example,dc=com" scope=2 deref=0 filter="(objectClass=*)"

Nov 27 15:56:01 ldap2 slapd[16337]: [ID 744844 local4.debug] conn=1002 op=1 SRCH attr=* +

The last lines show the bind and the replication search coming from ldap1 (192.168.1.70). Your replication should work now. Finally, choose any client and configure ldap.conf so it can use both LDAP servers:

# vi /etc/ldap.conf

...

BASE dc=example,dc=com

URI ldap://192.168.1.70:389 ldap://192.168.1.76:389

...

Add both servers to URI. If you now shut down either LDAP server, the client can still use the remaining one. When both LDAP servers are online again, they replicate from each other until they are back in a consistent state.
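One way to check that the two servers really are in a consistent state is to compare the contextCSN values of the suffix entry on both sides; they should be identical once replication has caught up. A minimal sketch with ldapsearch follows. The function names `context_csn` and `in_sync` are made up, and it assumes your ACLs let an anonymous client read contextCSN; otherwise add `-D` and `-w` (or `-W`) to the ldapsearch call.

```shell
# Prints the sorted contextCSN values of the suffix entry on one server.
# Usage: context_csn URI [BASE]
context_csn() {
    ldapsearch -x -LLL -H "$1" -s base -b "${2:-dc=example,dc=com}" contextCSN |
        sed -n 's/^contextCSN: //p' | sort
}

# Succeeds if both servers report the same, non-empty set of contextCSN values.
# Usage: in_sync URI1 URI2 [BASE]
in_sync() {
    a=$(context_csn "$1" "$3")
    b=$(context_csn "$2" "$3")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example: `in_sync ldap://192.168.1.70 ldap://192.168.1.76 && echo "in sync"`. Right after a change the values can differ for a moment, so repeat the check before assuming something is wrong.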
